Distributed learning: Difference between revisions

(Q1 commit 1) |

(Added Summary of survey and CIDR DLSF) |

||

| (4 intermediate revisions by one other user not shown) | |||

| Line 1: | Line 1: | ||

{{DISPLAYTITLE:Distributed Machine Learning}} | |||

The use of artificial intelligence (AI) in extreme edge devices such as wireless sensor nodes (WSNs) will greatly benefit its scalability and application space. AI can be applied to solve problems with clustering, data routing, and most importantly it can be used to reduce the volume of data transmission via data compression or making conclusions from data within the node itself <ref>Alsheikh, Mohammad Abu, et al. "Machine learning in wireless sensor networks: Algorithms, strategies, and applications." ''IEEE Communications Surveys & Tutorials'' 16.4 (2014): 1996-2018.</ref>. | The use of artificial intelligence (AI) in extreme edge devices such as wireless sensor nodes (WSNs) will greatly benefit its scalability and application space. AI can be applied to solve problems with clustering, data routing, and most importantly it can be used to reduce the volume of data transmission via data compression or making conclusions from data within the node itself <ref>Alsheikh, Mohammad Abu, et al. "Machine learning in wireless sensor networks: Algorithms, strategies, and applications." ''IEEE Communications Surveys & Tutorials'' 16.4 (2014): 1996-2018.</ref>. | ||

| Line 36: | Line 38: | ||

Zhou '21 describes edge AI as using widespread edge resources to gain AI insight. This means not only running an AI algorithm on one node, but potentially cooperatively running inference and training on multiple nodes. There are several degrees to the concept, ranging from training and inference in the server, training in the server but inference in the node, and running both training and inference on the node itself. Ideally, for the highest scalability and lowest communication overhead, training and inference ideally take place in the nodes. | Zhou '21 describes edge AI as using widespread edge resources to gain AI insight. This means not only running an AI algorithm on one node, but potentially cooperatively running inference and training on multiple nodes. There are several degrees to the concept, ranging from training and inference in the server, training in the server but inference in the node, and running both training and inference on the node itself. Ideally, for the highest scalability and lowest communication overhead, training and inference ideally take place in the nodes. | ||

== TinyML and accepted benchmarks for Edge AI == | == Distributed Data Processing Survey == | ||

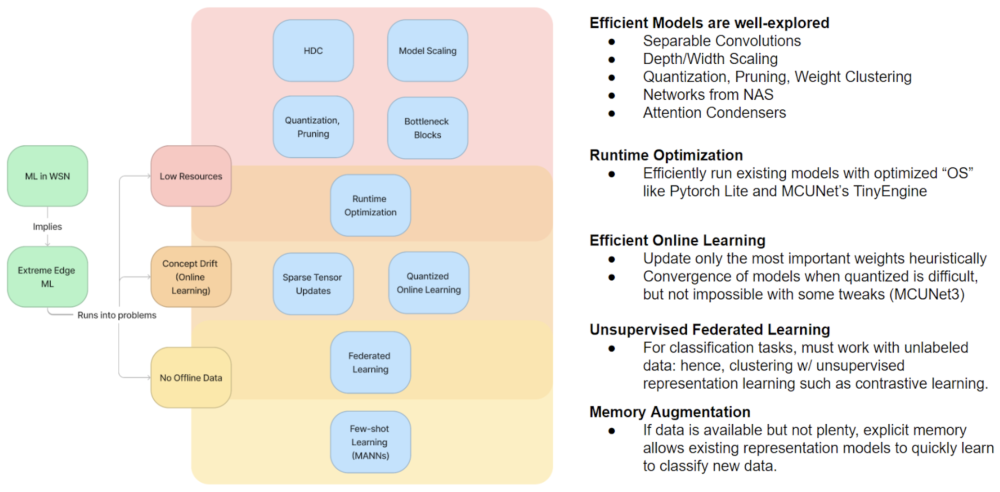

Running ML in WSN can encounter three problems: resource constraints, concept drift and lack of data for certain applications. From our survey, we found concepts for efficient computer vision that can be applied to most neural-network based ML, and further identified HDC as a good resource-efficient ML algorithm that can be hardware-accelerated. Online learning can be performed with as few resources as possible through the use of efficient libraries and heuristics by training only the most important parts of the model. | |||

[[File:Conceptual Summary of Distributed Learning.png|center|thumb|1000x1000px|Figure 1. Conceptual Summary of Surveyed Algorithms in Distributed Data Processing.]] | |||

=== TinyML and accepted benchmarks for Edge AI === | |||

The [https://www.tinyml.org/ TinyML organization] describes tinyML as the "field of machine learning technologies and applications including hardware, algorithms and software capable of performing on-device sensor data analytics at extremely low power, typically in the mW range and below, and hence enabling a variety of always-on use-cases and targeting battery operated devices". | The [https://www.tinyml.org/ TinyML organization] describes tinyML as the "field of machine learning technologies and applications including hardware, algorithms and software capable of performing on-device sensor data analytics at extremely low power, typically in the mW range and below, and hence enabling a variety of always-on use-cases and targeting battery operated devices". | ||

| Line 75: | Line 80: | ||

Anomaly detection is a similar task, where . This task covers cases where both the conclusions required and the input data are likely to be simple, but stakes are slightly higher where false positives and negatives must be minimized. | Anomaly detection is a similar task, where . This task covers cases where both the conclusions required and the input data are likely to be simple, but stakes are slightly higher where false positives and negatives must be minimized. | ||

= Efficient Software Models = | === Efficient Software Models === | ||

=== State of the Art: Efficient Vision Models === | ==== State of the Art: Efficient Vision Models ==== | ||

Traditional ML techniques struggle most with high-dimension image classification tasks. This is the main task for which heavily parametrized resource-heavy neural networks are used. Additionally, similar architectures are applied to reinforcement learning (such as the one for AlphaZero)<ref>Silver, David, et al. "A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play." Science 362.6419 (2018): 1140-1144.</ref>, and so similar efficient architectures are needed for running RL clustering models. | Traditional ML techniques struggle most with high-dimension image classification tasks. This is the main task for which heavily parametrized resource-heavy neural networks are used. Additionally, similar architectures are applied to reinforcement learning (such as the one for AlphaZero)<ref>Silver, David, et al. "A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play." Science 362.6419 (2018): 1140-1144.</ref>, and so similar efficient architectures are needed for running RL clustering models. | ||

| Line 89: | Line 94: | ||

!#FLOPs | !#FLOPs | ||

|- | |- | ||

| rowspan="3" | | | rowspan="3" |Residual Blocks & Skip Connections | ||

| rowspan="3" |ResNet-18 | | rowspan="3" |ResNet-18 | ||

|CIFAR-10 | |CIFAR-10 | ||

| Line 102: | Line 107: | ||

|72.33%/91.8% (SAMix Augmented Data) | |72.33%/91.8% (SAMix Augmented Data) | ||

|- | |- | ||

| | |Linear Bottleneck Blocks | ||

|MobileNetv2 | |MobileNetv2 | ||

|ImageNet | |ImageNet | ||

| Line 109: | Line 114: | ||

|300M | |300M | ||

|- | |- | ||

| rowspan="2" | | | rowspan="2" |Efficiently Scaled Model Depth & Width | ||

| rowspan="2" |EfficientNetB0 | | rowspan="2" |EfficientNetB0 | ||

|CIFAR-10 | |CIFAR-10 | ||

| Line 119: | Line 124: | ||

|'''77.1%/93.3% (Main Paper)''' | |'''77.1%/93.3% (Main Paper)''' | ||

|- | |- | ||

| rowspan="2" |Grouped | | rowspan="2" |Grouped Pointwise Convolutions | ||

|kEffNet-B0 16ch | |kEffNet-B0 16ch | ||

|CIFAR-10/100 | |CIFAR-10/100 | ||

| Line 132: | Line 137: | ||

|'''81M''' | |'''81M''' | ||

|- | |- | ||

| | |Neural Architecture Search | ||

|MCUNetv2M4 | |MCUNetv2M4 | ||

|ImageNet | |ImageNet | ||

| Line 139: | Line 144: | ||

|119M | |119M | ||

|- | |- | ||

| | |Neural Architecture Search | ||

|MCUNetv2H7 | |MCUNetv2H7 | ||

|ImageNet | |ImageNet | ||

| Line 147: | Line 152: | ||

|} | |} | ||

=== Principles of Efficient Inference === | ==== Principles of Efficient Inference ==== | ||

Convolutional neural networks (CNNs) are widely used for any task with data that come with spatial relations, such as images or time-based data. | Convolutional neural networks (CNNs) are widely used for any task with data that come with spatial relations, such as images or time-based data. | ||

| Line 160: | Line 165: | ||

Apart from those, NAS is an approach where heuristic algorithms are used to find accurate models with parameter count constraints ('''ProxylessNAS, MCUNet''') by efficiently estimating the probability of high accuracy (on a specific task) from a candidate model before training and optimizing search spaces.<ref>Lin, Ji, et al. "Mcunet: Tiny deep learning on iot devices." ''Advances in Neural Information Processing Systems'' 33 (2020): 11711-11722.</ref><ref>Cai, Han, Ligeng Zhu, and Song Han. "Proxylessnas: Direct neural architecture search on target task and hardware." ''arXiv preprint arXiv:1812.00332'' (2018).</ref> | Apart from those, NAS is an approach where heuristic algorithms are used to find accurate models with parameter count constraints ('''ProxylessNAS, MCUNet''') by efficiently estimating the probability of high accuracy (on a specific task) from a candidate model before training and optimizing search spaces.<ref>Lin, Ji, et al. "Mcunet: Tiny deep learning on iot devices." ''Advances in Neural Information Processing Systems'' 33 (2020): 11711-11722.</ref><ref>Cai, Han, Ligeng Zhu, and Song Han. "Proxylessnas: Direct neural architecture search on target task and hardware." ''arXiv preprint arXiv:1812.00332'' (2018).</ref> | ||

= Running efficient software in small hardware = | === Running efficient software in small hardware === | ||

=== State of the Art: Models demonstrated in microcontrollers === | ==== State of the Art: Models demonstrated in microcontrollers ==== | ||

Microcontrollers are devices that can be used for IoT and also as wireless sensors. Works in the TinyML field tend to demonstrate their works on resource-constrained microcontrollers, the best of whom are summarized here. | Microcontrollers are devices that can be used for IoT and also as wireless sensors. Works in the TinyML field tend to demonstrate their works on resource-constrained microcontrollers, the best of whom are summarized here. | ||

| Line 182: | Line 187: | ||

|- | |- | ||

|VWW | |VWW | ||

|80 | |80% | ||

|151.63ms | |151.63ms | ||

|4.03mJ | |4.03mJ | ||

| Line 193: | Line 198: | ||

|- | |- | ||

|CF10 | |CF10 | ||

|85 | |85% | ||

|158.13ms | |158.13ms | ||

|4.15mJ | |4.15mJ | ||

|- | |- | ||

|Speech Commands | |Speech Commands | ||

|90 | |90% | ||

|54.81ms | |54.81ms | ||

|1.48mJ | |1.48mJ | ||

|- | |- | ||

|ToyADMOS Car | |ToyADMOS Car | ||

| | |85% | ||

|5.73ms | |5.73ms | ||

|0.152mJ | |0.152mJ | ||

|- | |- | ||

|VWW | |VWW | ||

|80 | |80% | ||

|186ms | |186ms | ||

|1.721mJ | |1.721mJ | ||

| Line 219: | Line 224: | ||

|- | |- | ||

|CF10 | |CF10 | ||

|85 | |85% | ||

|240ms | |240ms | ||

|2.248mJ | |2.248mJ | ||

|- | |- | ||

|Speech Commands | |Speech Commands | ||

|90 | |90% | ||

|63.1ms | |63.1ms | ||

|0.611mJ | |0.611mJ | ||

|- | |- | ||

|ToyADMOS Car | |ToyADMOS Car | ||

| | |85% | ||

|5.41ms | |5.41ms | ||

|0.045mJ | |0.045mJ | ||

|- | |- | ||

|'''CF10''' | |'''CF10''' | ||

|'''84.5''' | |'''84.5%''' | ||

|'''1.5ms''' | |'''1.5ms''' | ||

|'''2.535mJ''' | |'''2.535mJ''' | ||

| Line 245: | Line 250: | ||

|- | |- | ||

|'''Speech Commands''' | |'''Speech Commands''' | ||

|'''82.5''' | |'''82.5%''' | ||

|'''0.033ms''' | |'''0.033ms''' | ||

|'''0.0537mJ''' | |'''0.0537mJ''' | ||

| Line 252: | Line 257: | ||

|- | |- | ||

|'''ToyADMOS Car''' | |'''ToyADMOS Car''' | ||

| | |83% | ||

|'''0.019ms''' | |'''0.019ms''' | ||

|'''0.03mJ''' | |'''0.03mJ''' | ||

| Line 354: | Line 359: | ||

|} | |} | ||

= Online learning & Learning with little data = | === Hyperdimensional Computing<ref>Kanerva, P. (2009). Hyperdimensional Computing: An Introduction to Computing in Distributed Representation with High-Dimensional Random Vectors. Cognitive Computation, 1(2), 139–159.</ref> === | ||

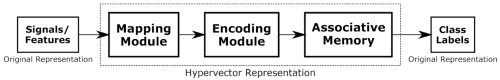

Hyperdimensional computing (HDC) is an ML paradigm where sets of data are encoded into a single high-dimensional vector (hypervector or HV). If the HV is encoded properly, HVs of closely related data will be very similar to each other and very different from HVs of unrelated data. This property is taken advantage of for the ML task of clustering or classification. HDC is very efficient not only because of its single-operation classification, but also because it can be reduced into binary precision for which efficient dedicated accelerators can be made. | |||

[[File:Gen Arch of HDC.png|thumb|500x500px|Figure 2. General Architecture of HDC.]] | |||

The general architecture of an HDC is shown in Figure 2. The mapping module assigns or maps a hypervector to each feature of the system. These hypervectors are then manipulated in the encoding module using the bundling and binding operations. During the training phase, the result of the encoding module is stored in the associative memory, where each class or category has a unique class hypervector. During the inference or testing phase, the result of the encoding module is compared with the stored hypervectors in the associative memory. The class with the highest similarity becomes the predicted class of the input. | |||

=== Online learning & Learning with little data === | |||

For some tasks, the ML model may need to be retrained on the spot on new data to account for changes in the environment or, more realistically, lack of sensor calibration for wireless sensor nodes. This task is known as Online Learning. Training is much more resource intensive than inference, making this task more challenging. This is addressed by using much more efficient ML models such as the ones surveyed leading to much less training parameters needed or by using efficient training software like that of MCUNet’s TinyLearningEngine. | |||

If enough data is available to pretrain a WSN, the technique called '''''transfer learning and classifier tuning''''' <ref>Ji Lin et al. “On-device training under 256kb memory”. In: arXiv preprint arXiv:2206.15472 (2022). </ref>can be used. By pretraining a network on a general but difficult task, then freezing (not training) parameters of the earlier layers while fine-tuning or retraining only the last few layers of the AI model, online learning can be done much faster with data and using fewer resources. | |||

If available data is not enough, the concept of explicit memory from '''''memory augmented neural networks'''''<ref>Geethan Karunaratne et al. “Robust high-dimensional memory-augmented neural networks”. In: Na- ture communications 12.1 (2021), p. 2468.</ref> can be used to store intermediate representations of data which can be compared to representation of new data to quickly learn to classify them. | |||

In some extreme cases, we may desire to use WSNs in tasks for which no available related dataset exists. In this case, the ML algorithm to be used inside the WSN nodes must be trained with data on-the-spot. However, a weak WSN node on its own cannot train a full model. '''''Federated learning'''''<ref>Brendan McMahan et al. “Communication-efficient learning of deep networks from decentralized data”. In: Artificial intelligence and statistics. PMLR. 2017, pp. 1273–1282. </ref> allows a heterogeneous set of computing nodes to collectively train a model using data that is obtained by the nodes themselves. Federated learning works by running similar online learning AI model architectures called the “backbone” in many devices at once, then sending individual devices’ model updates (that is, parameters or gradients thereof) to a central host and then aggregating them. A powerful trained model can be created in the host which can then distribute the better model. | |||

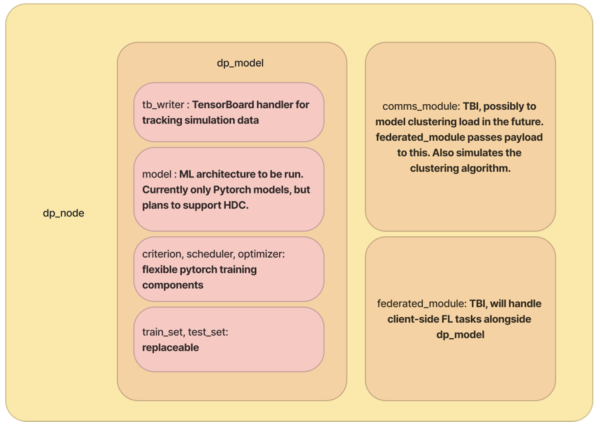

== CIDR Distributed Learning Simulation Framework == | |||

To introduce uniformity, add reproducibility, and interpretability to the simulations and the simulation code, we designed a simulation framework which we call the CIDR distributed learning (CIDR DL) simulation framework. The framework applies a Python object-oriented frontend to the necessary tools, like Pytorch<ref>PySyft. url: <nowiki>https://github.com/OpenMined/PySyft</nowiki>.</ref> for tensor computations and Flower<ref>Ekdeep Singh Lubana et al. “Orchestra: Unsupervised federated learning via globally consistent clustering”. In: arXiv preprint arXiv:2205.11506 (2022).</ref> for federated learning. Later on, we plan to include quantization and efficient inference compilers like MCUNet off the box. | |||

The current structure, along with the class attributes that are yet to be implemented (TBI), are shown in Figure 3. In the framework, the base object is a distributed processing node (dp_node) which can contain a distributed processing model (dp_model) along with a communications module (comms_module) and a module that handles the federated learning tasks (federated_module). The dp_model class contains all of the objects necessary to run the machine learning hardware. As such, it is the one that uses Pytorch. The comms_module class will later simulate the algorithms for clustering and communication. Structuring it this way will allow us to instantiate multiple distributed processing nodes and simulate the function on the WSN network using a network simulator. | |||

[[File:CIDR DLSF.png|center|thumb|600x600px|Figure 3. CIDR Simulation Framework]] | |||

=== References === | === References === | ||

Latest revision as of 17:29, 28 June 2023

The use of artificial intelligence (AI) in extreme edge devices such as wireless sensor nodes (WSNs) can greatly improve the scalability and application space of sensor networks. AI can be applied to clustering and data routing and, most importantly, it can reduce the volume of transmitted data, either through data compression or by drawing conclusions from the data within the node itself [1].

At the moment, potential use cases for ML in WSN include, but are not limited to:

- General Pattern Classification (including recognizing speech commands, voice recognition for authentication, bio-signal processing for medical sensors)

- Image Processing (including presence detection a.k.a. visual wake words, car counting for traffic analytics)

- Node routing and scheduling to improve network scalability and lifespan (through the use of RL, see more in clustering).

However, since devices in the extreme edge are constrained to work with extremely low amounts of energy [2], even the simplest AI models are difficult to execute with typical sequential processors. Because of these energy constraints, WSNs have memories on the order of kB and clock speeds on the order of kHz to MHz, which leaves them unable to run state-of-the-art AI applications.

| Reference WSN Project | Device | CLK | Memory | Secondary Memory | Processor |

|---|---|---|---|---|---|

| ReSE2NSE v1 | Digi XBee-Pro, TI MSP430F213 | 16MHz | 512B | 8KB+256B | 16-bit MSP-430 |

| ReSE2NSE v2 | ATSAMR21 | <48MHz | 32KB | 256KB | ARM32-Cortex M0 |

In contrast with the above specs, the TinyML recommended model for classifying CIFAR-10 has 3.5MB of parameters.
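
The gap between the model size and the node memories can be made concrete with a little arithmetic. The sketch below is only an illustration: the memory sizes are copied from the table above, while treating 1 KB as 1024 bytes and the 3.5MB figure as the full storage needed for the parameters are our assumptions.

```python
# Rough footprint check: how much larger is the model than a node's memory?
# Assumptions: 1 KB = 1024 bytes, and 3.5 MB is the total parameter storage.
KB = 1024
MB = 1024 * KB

MODEL_BYTES = 3.5 * MB

# (main memory, secondary memory) in bytes, from the reference WSN table
NODES = {
    "ReSE2NSE v1": (512, 8 * KB + 256),
    "ReSE2NSE v2": (32 * KB, 256 * KB),
}


def shortfall_factor(model_bytes, main, secondary):
    """How many times larger the model is than all memory on the node."""
    return model_bytes / (main + secondary)


for name, (main, secondary) in NODES.items():
    factor = shortfall_factor(MODEL_BYTES, main, secondary)
    print(f"{name}: model is about {factor:.1f}x larger than total memory")
```

Even when main and secondary memory are counted together, the model is more than an order of magnitude too large for the newer node, which is why the efficient models surveyed below matter.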

Apart from the above constraints, WSNs running AI are placed in environments that may change over time and may differ from the environment in which the AI was trained. This problem is known as concept drift. AI algorithms running on WSNs need to keep working under continuously changing patterns, using concepts such as online learning.

Zhou '21 describes edge AI as using widespread edge resources to gain AI insight. This means not only running an AI algorithm on one node, but potentially running inference and training cooperatively on multiple nodes. There are several degrees to the concept: training and inference in the server, training in the server but inference in the node, and both training and inference on the node itself. For the highest scalability and lowest communication overhead, training and inference would ideally both take place in the nodes.

Distributed Data Processing Survey

Running ML in WSN can encounter three problems: resource constraints, concept drift, and lack of data for certain applications. From our survey, we found concepts for efficient computer vision that can be applied to most neural-network-based ML, and further identified hyperdimensional computing (HDC) as a resource-efficient ML algorithm that can be hardware-accelerated. Online learning can be performed with few resources by using efficient libraries and heuristics that train only the most important parts of the model.

TinyML and accepted benchmarks for Edge AI

The TinyML organization describes tinyML as the "field of machine learning technologies and applications including hardware, algorithms and software capable of performing on-device sensor data analytics at extremely low power, typically in the mW range and below, and hence enabling a variety of always-on use-cases and targeting battery operated devices".

MLPerfTiny[3] is widely accepted as the common benchmarking requirement for TinyML works. It prescribes basic accuracy targets along with suggested models (if benchmarking hardware) for 4 selected basic use cases for TinyML as shown in the table below.

| Use Case | Dataset | Suggested Model | Quality Target |

|---|---|---|---|

| Visual Wakewords | MSCOCO | MobileNetv1 | 80% (top-1) |

| Keyword Spotting | Google Speech Commands | DS-CNN | 90% (top-1) |

| Image Classification | CIFAR-10 | ResNet | 85% (top-1) |

| Anomaly Detection | DCASE2020 | FC-Autoencoder | 85% Area under Curve |

Visual Wakewords is a classification task of telling whether or not a person is in a picture. This covers similar tasks where complex high-resolution data is provided but the conclusions required are simple.

Anomaly detection is a similar task. It covers cases where both the required conclusions and the input data are likely to be simple, but the stakes are slightly higher because false positives and false negatives must be minimized.

Efficient Software Models

State of the Art: Efficient Vision Models

Traditional ML techniques struggle most with high-dimensional image classification tasks. This is the main task for which heavily parametrized, resource-heavy neural networks are used. Similar architectures are also applied to reinforcement learning (RL), such as the one for AlphaZero [4], so similarly efficient architectures are needed for running RL clustering models.

Shown below is a table summarizing the most efficient known vision models.

| Notes | Architecture | Applied to | Accuracy | Parameters | #FLOPs |

|---|---|---|---|---|---|

| Residual Blocks & Skip Connections | ResNet-18 | CIFAR-10 | 85% (Wan et al.) | 11M | 1800M |

| Residual Blocks & Skip Connections | ResNet-18 | CIFAR-100 | 82.3% (SAMix Augmented Data) | 11M | 1800M |

| Residual Blocks & Skip Connections | ResNet-18 | ImageNet | 72.33%/91.8% (SAMix Augmented Data) | 11M | 1800M |

| Linear Bottleneck Blocks | MobileNetv2 | ImageNet | 72% | 3.5M | 300M |

| Efficiently Scaled Model Depth & Width | EfficientNetB0 | CIFAR-10 | 93.52% (Main Paper) (Transfer Learning) | 5.3M | 390M |

| Efficiently Scaled Model Depth & Width | EfficientNetB0 | ImageNet | 77.1%/93.3% (Main Paper) | 5.3M | 390M |

| Grouped Pointwise Convolutions | kEffNet-B0 16ch | CIFAR-10/100 | 92.24%/71.92% | 0.64M | 129M |

| Grouped Pointwise Convolutions | kMobileNet Large 16ch | CIFAR-10/100 | 92.74%/71.36% | 0.40M | 81M |

| Neural Architecture Search | MCUNetv2M4 | ImageNet | 64.90% | 0.47M | 119M |

| Neural Architecture Search | MCUNetv2H7 | ImageNet | 71.80% | 0.67M | 256M |

Principles of Efficient Inference

Convolutional neural networks (CNNs) are widely used for any task with data that come with spatial relations, such as images or time-based data.

Empirically, deeper networks (with more layers) are known to perform better on more complicated tasks [5]. However, there is a limit to making networks deeper: beyond a certain depth, training them is no longer possible. Models such as ResNet, which add skip connections around sets of layers (known as residual blocks), were introduced to solve this problem, allowing for extremely deep networks. [6]

Improving upon that, MobileNetv2 showed that residual blocks that change the number of channels inside the block allow networks to keep good performance with a much lower parameter count and number of operations. Classic bottleneck residuals reduce and then expand the channel count, while the inverted residuals of MobileNetv2 expand it and then reduce it through a linear bottleneck.[7]

EfficientNet was developed when researchers analyzed how performance changes in the earlier networks as parameters of the architecture, such as the number of channels or the network depth, are varied. They found ways both to improve accuracy by scaling the network up and to improve efficiency by scaling it down in a way that preserves accuracy.[8]
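
As a rough illustration of this kind of scaling, the sketch below uses the compound scaling rule from the EfficientNet paper [8], where depth, width, and input resolution grow as powers of a single coefficient. The constants 1.2, 1.1, and 1.15 are the values reported in that paper for the B0 baseline; the function names are ours and purely illustrative.

```python
# Compound scaling sketch. ALPHA, BETA and GAMMA are the depth, width and
# resolution constants reported in the EfficientNet paper; the helper names
# are hypothetical and not part of any library.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi):
    """Multipliers for depth, width and input resolution at scaling step phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def flops_multiplier(phi):
    """FLOPs grow roughly with depth * width^2 * resolution^2."""
    depth, width, resolution = compound_scale(phi)
    return depth * width ** 2 * resolution ** 2


for phi in range(4):
    depth, width, resolution = compound_scale(phi)
    print(f"phi={phi}: depth x{depth:.2f}, width x{width:.2f}, "
          f"resolution x{resolution:.2f}, FLOPs x{flops_multiplier(phi):.2f}")
```

Because the constants are chosen so that one step roughly doubles the FLOPs, the single coefficient acts as a knob that trades compute for accuracy in a predictable way.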

Works from 2022 (kEffNet, kMobileNet) found that applying older principles, such as grouped convolutions and pointwise convolutions, to the recent efficient networks further reduces the needed parameters and floating-point operations. [9]
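
The parameter savings from grouping can be checked with a short count. This is a minimal sketch: the channel count of 256 and the 16 groups are arbitrary illustration values, not settings taken from kEffNet or kMobileNet, and biases are ignored.

```python
def conv_params(c_in, c_out, kernel=1, groups=1):
    """Weight count of a 2D convolution, ignoring biases.

    Each of the `groups` groups connects c_in/groups input channels to
    c_out/groups output channels, so the total shrinks by a factor of `groups`.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return (c_in // groups) * c_out * kernel * kernel


# Pointwise (1x1) convolution with illustrative channel counts
dense_pointwise = conv_params(256, 256)
grouped_pointwise = conv_params(256, 256, groups=16)

print(dense_pointwise, grouped_pointwise, dense_pointwise // grouped_pointwise)
```

The grouped layer needs 16 times fewer weights here, at the cost of channels in different groups no longer being mixed by that layer.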

Apart from those, neural architecture search (NAS) is an approach in which heuristic algorithms find accurate models under parameter count constraints (ProxylessNAS, MCUNet). It works by optimizing the search space and by efficiently estimating, before training, the probability that a candidate model will reach high accuracy on a specific task.[10][11]

Running efficient software in small hardware

State of the Art: Models demonstrated in microcontrollers

Microcontrollers are devices that can be used for IoT and also as wireless sensors. Works in the TinyML field tend to be demonstrated on resource-constrained microcontrollers, the best of which are summarized here.

The table below summarizes the best demonstrations submitted to MLCommons: Tiny Inference. The most efficient work by far is a digital accelerator by CERN, submitted to the open division, which used custom models (not the prescribed models) and special high-level synthesis (HLS) on an FPGA. [12]

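| Application | Accuracy/AUC/Dist | Inference Latency | Energy Per Inference | Software Stack | Device | CLK | Memory + Secondary | Processor | Voltage |

|---|---|---|---|---|---|---|---|---|---|

| VWW | 80% | 151.63ms | 4.03mJ | X-Cube-AI v.7.1.0 | Nucleo-U575ZI-Q | 160 MHz | 768k + 2M | ARM32CM33 | 1.8V / SMPS |

| CF10 | 85% | 158.13ms | 4.15mJ | X-Cube-AI v.7.1.0 | Nucleo-U575ZI-Q | 160 MHz | 768k + 2M | ARM32CM33 | 1.8V / SMPS |

| Speech Commands | 90% | 54.81ms | 1.48mJ | X-Cube-AI v.7.1.0 | Nucleo-U575ZI-Q | 160 MHz | 768k + 2M | ARM32CM33 | 1.8V / SMPS |

| ToyADMOS Car | 85% | 5.73ms | 0.152mJ | X-Cube-AI v.7.1.0 | Nucleo-U575ZI-Q | 160 MHz | 768k + 2M | ARM32CM33 | 1.8V / SMPS |

| VWW | 80% | 186ms | 1.721mJ | Silicon Labs Gecko SDK/ TFLite Micro | Silicon Labs xG24-DK2601B | 78 MHz | 256k + 1.5M | ARM32CM33 w/ FPU + DSP + TrustZone | 1.8V |

| CF10 | 85% | 240ms | 2.248mJ | Silicon Labs Gecko SDK/ TFLite Micro | Silicon Labs xG24-DK2601B | 78 MHz | 256k + 1.5M | ARM32CM33 w/ FPU + DSP + TrustZone | 1.8V |

| Speech Commands | 90% | 63.1ms | 0.611mJ | Silicon Labs Gecko SDK/ TFLite Micro | Silicon Labs xG24-DK2601B | 78 MHz | 256k + 1.5M | ARM32CM33 w/ FPU + DSP + TrustZone | 1.8V |

| ToyADMOS Car | 85% | 5.41ms | 0.045mJ | Silicon Labs Gecko SDK/ TFLite Micro | Silicon Labs xG24-DK2601B | 78 MHz | 256k + 1.5M | ARM32CM33 w/ FPU + DSP + TrustZone | 1.8V |

| CF10 | 84.5% | 1.5ms | 2.535mJ | FINN | Xilinx PynqZ2 | 100 MHz / 650 MHz | + 128M | Dual Core ARM, Cortex-A9 MPCore | ? |

| Speech Commands | 82.5% | 0.033ms | 0.0537mJ | FINN | Xilinx PynqZ2 | 100 MHz / 650 MHz | + 128M | Dual Core ARM, Cortex-A9 MPCore | ? |

| ToyADMOS Car | 83% | 0.019ms | 0.03mJ | hls4ml | Xilinx PynqZ2 | 100 MHz / 650 MHz | + 128M | Dual Core ARM, Cortex-A9 MPCore | ? |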
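
Latency and energy per inference together give the average power drawn during an inference, which helps when comparing the microcontrollers against the FPGA. The sketch below takes three rows from the table above; it assumes the reported energy covers exactly the reported latency window, which the table itself does not state.

```python
# (latency in ms, energy per inference in mJ), copied from the table above.
# Assumption: the energy figure covers exactly the latency window.
RESULTS = {
    "Nucleo-U575ZI-Q, VWW": (151.63, 4.03),
    "xG24-DK2601B, VWW": (186.0, 1.721),
    "PynqZ2 (FINN), CF10": (1.5, 2.535),
}


def average_power_mw(latency_ms, energy_mj):
    """Average power in mW: mJ divided by ms gives W, so scale by 1000."""
    return energy_mj / latency_ms * 1000.0


for name, (latency_ms, energy_mj) in RESULTS.items():
    print(f"{name}: {average_power_mw(latency_ms, energy_mj):.1f} mW")
```

Under that assumption, the microcontrollers stay in the tens of milliwatts while the FPGA draws far more power for a much shorter time, so energy per inference rather than power is the fairer metric for battery-operated nodes.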

In addition to the above, MIT's Han Lab has demonstrated many works that pass MLPerfTiny targets on very small microcontrollers, as tabulated below.

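| Work | Model | Precision | Dataset | Accuracy (%) | Latency | Software Stack | Device | CLK | Memory + Secondary | Processor | Voltage |

|---|---|---|---|---|---|---|---|---|---|---|---|

| MCUNet1 | MCUNet | INT4 | ImageNet | 62 | | TinyEngine | STM32F412 | 100 MHz | 256kB + 1M | ARM32CM4 | 1.7V-3.6V |

| MCUNet1 | MCUNet | INT4 | ImageNet | 63.5 | | TinyEngine | STM32F746 | 216 MHz | 320k + 1M | ARM32CM7 | 1.7V-3.6V |

| MCUNet1 | MCUNet | INT4 | ImageNet | 65.9 | | TinyEngine | STM32F765 | 216 MHz | 512k + 1M | ARM32CM7 | 1.7V-3.6V |

| MCUNet1 | MCUNet | INT4 | ImageNet | 70.7 | | TinyEngine | STM32H743 | 480 MHz | 512k + 1M | ARM32CM7 | 1.7V-3.6V |

| MCUNet1 | MCUNet | INT8 | VWW | 92 | 880ms | TinyEngine | STM32F746 | 216 MHz | 320k + 1M | ARM32CM7 | 1.7V-3.6V |

| MCUNet1 | MCUNet | INT8 | VWW | 88.7 | 200ms | TinyEngine | STM32F746 | 216 MHz | 320k + 1M | ARM32CM7 | 1.7V-3.6V |

| MCUNet1 | MCUNet | INT8 | VWW | 87 | 100ms | TinyEngine | STM32F746 | 216 MHz | 320k + 1M | ARM32CM7 | 1.7V-3.6V |

| MCUNet1 | MCUNet | INT8 | Speech Commands | 96 | 1105ms | TinyEngine | STM32F746 | 216 MHz | 320k + 1M | ARM32CM7 | 1.7V-3.6V |

| MCUNet2 | MCUNet2 | INT8 | ImageNet | 71.8 | | TinyEngine | STM32H743 | 480 MHz | 512k + 2M | ARM32CM7 | 1.7V-3.6V |

| MCUNet2 | MCUNet2 | INT8 | ImageNet | 64.9 | | TinyEngine | STM32F412 | 100 MHz | 256kB + 1M | ARM32CM4 | 1.7V-3.6V |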

Hyperdimensional Computing[13]

Hyperdimensional computing (HDC) is an ML paradigm where sets of data are encoded into a single high-dimensional vector (hypervector or HV). If the HVs are encoded properly, HVs of closely related data will be very similar to each other and very different from HVs of unrelated data. This property is exploited for the ML tasks of clustering and classification. HDC is very efficient not only because of its single-operation classification, but also because it can be reduced to binary precision, for which efficient dedicated accelerators can be made.

The general architecture of an HDC system is shown in Figure 2. The mapping module assigns or maps a hypervector to each feature of the system. These hypervectors are then manipulated in the encoding module using the bundling and binding operations. During the training phase, the result of the encoding module is stored in the associative memory, where each class or category has a unique class hypervector. During the inference or testing phase, the result of the encoding module is compared with the stored hypervectors in the associative memory. The class with the highest similarity becomes the predicted class of the input.
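
The mapping, encoding, and associative memory stages described above can be sketched in plain Python. This is a minimal sketch under several assumptions of ours: bipolar (+1/-1) hypervectors, binding as element-wise multiplication, bundling as an element-wise sum followed by a sign threshold, and a small dimensionality of 2000 to keep it fast. The class and function names are hypothetical and do not come from any HDC library.

```python
import random

DIM = 2000  # hypervector dimensionality (illustrative; larger values are common)


def random_hv(rng):
    """A random bipolar hypervector."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]


def bind(a, b):
    """Binding: element-wise multiplication of bipolar hypervectors."""
    return [x * y for x, y in zip(a, b)]


def bundle(hvs):
    """Bundling: element-wise sum, then a sign threshold (ties go to +1)."""
    return [1 if sum(column) >= 0 else -1 for column in zip(*hvs)]


def similarity(a, b):
    """Normalized dot product, between -1 and 1."""
    return sum(x * y for x, y in zip(a, b)) / DIM


class HDClassifier:
    def __init__(self, n_features, n_levels, seed=0):
        rng = random.Random(seed)
        # Mapping module: one HV per feature position and one per value level
        self.feature_hvs = [random_hv(rng) for _ in range(n_features)]
        self.level_hvs = [random_hv(rng) for _ in range(n_levels)]
        self.associative_memory = {}  # class label -> class hypervector

    def encode(self, sample):
        """Encoding module: bind each feature to its value, then bundle."""
        bound = [bind(self.feature_hvs[i], self.level_hvs[value])
                 for i, value in enumerate(sample)]
        return bundle(bound)

    def train(self, samples, labels):
        """Bundle the encoded samples of each class into one class HV."""
        by_class = {}
        for sample, label in zip(samples, labels):
            by_class.setdefault(label, []).append(self.encode(sample))
        self.associative_memory = {c: bundle(h) for c, h in by_class.items()}

    def predict(self, sample):
        """Return the class whose hypervector is most similar to the query."""
        query = self.encode(sample)
        return max(self.associative_memory,
                   key=lambda c: similarity(query, self.associative_memory[c]))


# Toy data: four quantized features, each taking level 0 or 1
clf = HDClassifier(n_features=4, n_levels=2)
clf.train([[0, 0, 0, 0], [0, 0, 0, 1], [1, 1, 1, 1], [1, 1, 1, 0]],
          ["low", "low", "high", "high"])
print(clf.predict([0, 0, 0, 0]), clf.predict([1, 1, 1, 1]))
```

Training touches each sample once and inference is a handful of dot products, which is the property that makes HDC attractive for small nodes.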

Online learning & Learning with little data

For some tasks, the ML model may need to be retrained on the spot on new data to account for changes in the environment or, more realistically, for the lack of sensor calibration in wireless sensor nodes. This task is known as online learning. Training is much more resource-intensive than inference, which makes the task more challenging. This is addressed either by using much more efficient ML models, such as the ones surveyed, which need far fewer trainable parameters, or by using efficient training software like MCUNet’s TinyLearningEngine.

If enough data is available to pretrain a WSN, the technique called transfer learning and classifier tuning [14] can be used. The network is pretrained on a general but difficult task; then the parameters of the earlier layers are frozen (not trained) while only the last few layers of the AI model are fine-tuned or retrained. This way, online learning can be done much faster, with less data and fewer resources.
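
The idea of freezing early layers and tuning only the classifier can be sketched without any ML library. In the sketch below, `frozen_features` is a hypothetical stand-in for pretrained early layers, and only a small logistic-regression head is updated. It illustrates the principle only and is not the on-device training method of [14].

```python
import math


def frozen_features(x):
    """Stand-in for pretrained early layers; its parameters are never updated."""
    return [x[0] + x[1], x[0] - x[1], 1.0]  # last entry acts as a bias input


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def tune_classifier(data, epochs=200, lr=0.5):
    """Train only the last layer (the head) with plain SGD on the log loss."""
    head = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y in data:
            features = frozen_features(x)
            p = sigmoid(sum(w * f for w, f in zip(head, features)))
            # Gradient of the log loss with respect to the head weights only
            head = [w - lr * (p - y) * f for w, f in zip(head, features)]
    return head


def predict(head, x):
    score = sum(w * f for w, f in zip(head, frozen_features(x)))
    return 1 if score > 0 else 0


# A handful of labeled samples collected on the node (toy values)
DATA = [([0.0, 0.0], 0), ([1.0, 1.0], 1), ([0.2, 0.1], 0), ([0.9, 0.8], 1)]
head = tune_classifier(DATA)
print([predict(head, x) for x, _ in DATA])
```

Only three weights are updated here, so the memory needed for gradients stays tiny compared with training the whole model.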

If the available data is not enough, the concept of explicit memory from memory augmented neural networks[15] can be used. Intermediate representations of data are stored and compared against the representations of new data, so the model can quickly learn to classify them.
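
A key-value store with a similarity lookup captures the core of this explicit memory idea. The sketch below is an assumption-laden illustration: the short lists stand in for intermediate representations that a network would produce, cosine similarity is our choice of comparison, and the `ExplicitMemory` class is hypothetical rather than the design used in [15].

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class ExplicitMemory:
    """Stores (representation, label) pairs and answers by nearest match."""

    def __init__(self):
        self.keys = []
        self.labels = []

    def write(self, representation, label):
        """Learning a new example is a single write, with no gradient step."""
        self.keys.append(list(representation))
        self.labels.append(label)

    def read(self, representation):
        """Return the label of the most similar stored representation."""
        best = max(range(len(self.keys)),
                   key=lambda i: cosine(representation, self.keys[i]))
        return self.labels[best]


memory = ExplicitMemory()
memory.write([1.0, 0.0], "a")  # one example per class is enough to start
memory.write([0.0, 1.0], "b")
print(memory.read([0.9, 0.2]))
```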

In some extreme cases, we may want to use WSNs in tasks for which no related dataset is available. In this case, the ML algorithm inside the WSN nodes must be trained with data on the spot. However, a weak WSN node cannot train a full model on its own. Federated learning[16] allows a heterogeneous set of computing nodes to collectively train a model using data obtained by the nodes themselves. It works by running similar online learning AI model architectures, called the “backbone”, in many devices at once, then sending the individual devices’ model updates (that is, parameters or gradients thereof) to a central host, which aggregates them. The host thereby builds a more powerful trained model, which it can then distribute back to the nodes.
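
The aggregation step at the host can be sketched as a weighted average of the parameters sent by the nodes, in the spirit of the federated averaging of [16]. Model parameters are flattened into plain lists here, and weighting each node by its number of local samples is the assumption we make for the aggregation rule; the function name is illustrative.

```python
def federated_average(client_updates):
    """Aggregate client parameters, weighting each client by its sample count.

    client_updates: list of (parameters, n_samples) pairs, where parameters
    is a flat list of floats with the same length for every client.
    """
    total_samples = sum(n for _, n in client_updates)
    n_params = len(client_updates[0][0])
    return [
        sum(params[i] * n for params, n in client_updates) / total_samples
        for i in range(n_params)
    ]


# Two nodes report locally trained parameters and how much data they used
updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
global_params = federated_average(updates)
print(global_params)
```

Only parameters travel over the network in this scheme, so the raw sensor data never has to leave the node that collected it.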

CIDR Distributed Learning Simulation Framework

To bring uniformity, reproducibility, and interpretability to the simulations and the simulation code, we designed a simulation framework which we call the CIDR distributed learning (CIDR DL) simulation framework. The framework applies a Python object-oriented frontend to the necessary tools, like PyTorch[17] for tensor computations and Flower[18] for federated learning. Later on, we plan to include quantization and efficient inference compilers like MCUNet out of the box.

The current structure, along with the class attributes that are yet to be implemented (TBI), is shown in Figure 3. In the framework, the base object is a distributed processing node (dp_node) which can contain a distributed processing model (dp_model) along with a communications module (comms_module) and a module that handles the federated learning tasks (federated_module). The dp_model class contains all of the objects necessary to run the machine learning hardware. As such, it is the one that uses PyTorch. The comms_module class will later simulate the algorithms for clustering and communication. Structuring it this way will allow us to instantiate multiple distributed processing nodes and simulate their function on the WSN network using a network simulator.
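
The composition described above might look roughly like the skeleton below. Only the four class names come from the framework description; every attribute and method shown is a hypothetical placeholder of ours, and the real dp_model would wrap PyTorch objects instead of a plain list of weights.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class dp_model:
    """Holds what is needed to run the ML model (PyTorch in the real framework)."""
    weights: List[float] = field(default_factory=list)  # hypothetical attribute

    def infer(self, sample: List[float]) -> float:  # hypothetical method
        return sum(w * x for w, x in zip(self.weights, sample))


@dataclass
class comms_module:
    """Placeholder for the clustering and communication algorithms (TBI)."""
    neighbors: List[str] = field(default_factory=list)  # hypothetical attribute


@dataclass
class federated_module:
    """Placeholder for the federated learning tasks."""
    rounds_completed: int = 0  # hypothetical attribute


@dataclass
class dp_node:
    """Base object: one simulated distributed processing node."""
    name: str
    model: Optional[dp_model] = None
    comms: comms_module = field(default_factory=comms_module)
    federated: federated_module = field(default_factory=federated_module)


# Instantiate several nodes, as a network simulator would
nodes = [dp_node(name=f"node{i}", model=dp_model(weights=[0.5, 0.5]))
         for i in range(3)]
print([node.name for node in nodes], nodes[0].model.infer([1.0, 3.0]))
```

Keeping the model, communications, and federated logic in separate objects lets each node be swapped or extended independently when the simulation grows.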

References

- ↑ Alsheikh, Mohammad Abu, et al. "Machine learning in wireless sensor networks: Algorithms, strategies, and applications." IEEE Communications Surveys & Tutorials 16.4 (2014): 1996-2018.

- ↑ Ma, Dong, et al. "Sensing, computing, and communications for energy harvesting iots: A survey." IEEE Communications Surveys & Tutorials 22.2 (2019): 1222-1250.

- ↑ Banbury, Colby, et al. "Mlperf tiny benchmark." arXiv preprint arXiv:2106.07597 (2021).

- ↑ Silver, David, et al. "A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play." Science 362.6419 (2018): 1140-1144.

- ↑ He, Kaiming, et al. "Deep residual learning for image recognition." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

- ↑ He, Kaiming, et al. "Deep residual learning for image recognition." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

- ↑ Sandler, Mark, et al. "Mobilenetv2: Inverted residuals and linear bottlenecks." Proceedings of the IEEE conference on computer vision and pattern recognition. 2018.

- ↑ Tan, Mingxing, and Quoc Le. "Efficientnet: Rethinking model scaling for convolutional neural networks." International conference on machine learning. PMLR, 2019.

- ↑ Schuler, Joao Paulo Schwarz, et al. "Grouped Pointwise Convolutions Reduce Parameters in Convolutional Neural Networks." MENDEL. Vol. 28. No. 1. 2022.

- ↑ Lin, Ji, et al. "Mcunet: Tiny deep learning on iot devices." Advances in Neural Information Processing Systems 33 (2020): 11711-11722.

- ↑ Cai, Han, Ligeng Zhu, and Song Han. "Proxylessnas: Direct neural architecture search on target task and hardware." arXiv preprint arXiv:1812.00332 (2018).

- ↑ Borras, Hendrik, et al. "Open-source FPGA-ML codesign for the MLPerf Tiny Benchmark." arXiv preprint arXiv:2206.11791 (2022).

- ↑ Kanerva, P. (2009). Hyperdimensional Computing: An Introduction to Computing in Distributed Representation with High-Dimensional Random Vectors. Cognitive Computation, 1(2), 139–159.

- ↑ Lin, Ji, et al. "On-device training under 256kb memory." arXiv preprint arXiv:2206.15472 (2022).

- ↑ Karunaratne, Geethan, et al. "Robust high-dimensional memory-augmented neural networks." Nature Communications 12.1 (2021): 2468.

- ↑ McMahan, Brendan, et al. "Communication-efficient learning of deep networks from decentralized data." Artificial Intelligence and Statistics. PMLR, 2017: 1273–1282.

- ↑ PySyft. url: https://github.com/OpenMined/PySyft.

- ↑ Lubana, Ekdeep Singh, et al. "Orchestra: Unsupervised federated learning via globally consistent clustering." arXiv preprint arXiv:2205.11506 (2022).