Distributed learning

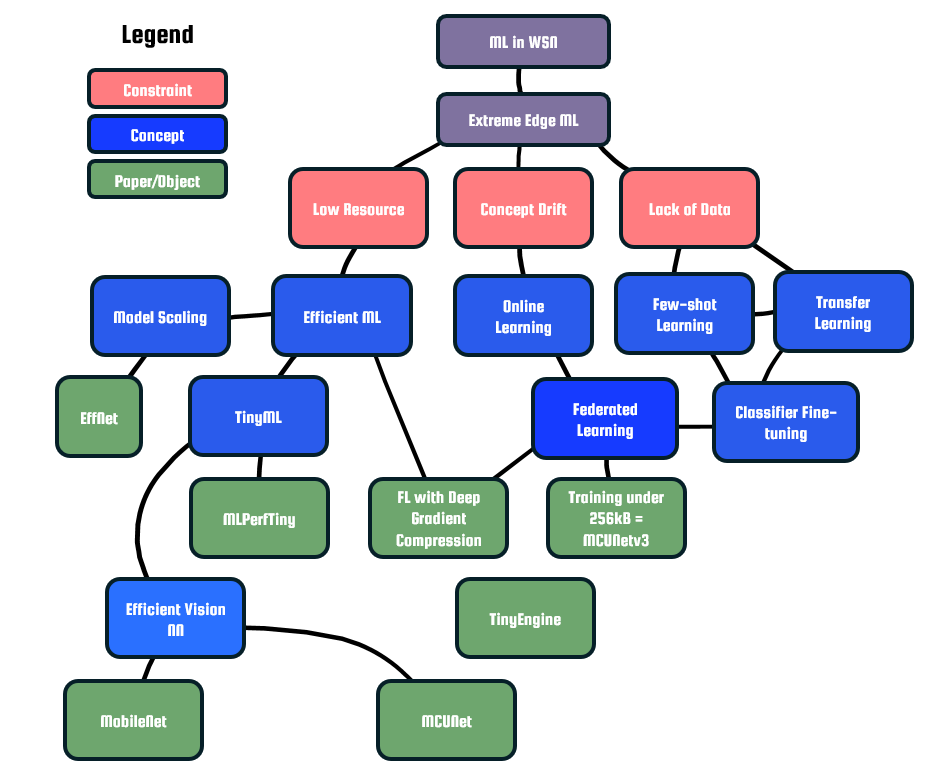

Running artificial intelligence (AI) on extreme edge devices such as wireless sensor nodes (WSNs) can greatly expand the scalability and application space of sensor networks. AI can be applied to clustering and data routing problems and, most importantly, it can reduce the volume of transmitted data, either by compressing the data or by drawing conclusions from it within the node itself [1].

At the moment, potential use cases for machine learning (ML) include, but are not limited to:

- Pattern Classification (including recognizing speech commands, voice recognition for authentication, bio-signal processing for medical sensors)

- Image Processing (including presence detection a.k.a. visual wake words, car counting for traffic analytics)

- Node routing and scheduling (see more in clustering)

However, since devices at the extreme edge must operate on extremely small amounts of energy [2], even the simplest AI models are difficult to execute on typical sequential processors. Because of these energy constraints, WSNs have memories on the order of kB and clock speeds on the order of kHz to MHz, which leaves them unable to run state-of-the-art AI applications.

| Reference WSN Project | Device | Clock | Memory | Secondary Memory | Processor |
|---|---|---|---|---|---|
| ReSE2NSE v1 | Digi XBee-Pro, TI MSP430F213 | 16 MHz | 512 B | 8 KB + 256 B | 16-bit MSP-430 |
| ReSE2NSE v2 | ATSAMR21 | <48 MHz | 32 KB | 256 KB | ARM32-Cortex M0 |

In contrast with the specifications above, the TinyML recommended model for classifying CIFAR-10 has 3.5 MB of parameters.
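To make the gap concrete, the following minimal sketch divides the quoted model size by the memory figures from the table above. It assumes binary units (1 KB = 1024 B) and treats the 3.5 MB figure as the size of the parameters in bytes; the helper name `shortfall` is hypothetical and used only for illustration.

```python
# Memory figures come from the reference WSN table; the 3.5 MB model size is
# the figure quoted in the text. Binary units (1 KB = 1024 B) are an assumption.
KB = 1024
MB = 1024 * KB

MODEL_BYTES = int(3.5 * MB)

# (memory, secondary memory) in bytes for each reference node
NODES = {
    "ReSE2NSE v1": (512, 8 * KB + 256),
    "ReSE2NSE v2": (32 * KB, 256 * KB),
}


def shortfall(model_bytes, capacity_bytes):
    """Return how many times larger the model is than the given capacity."""
    return model_bytes / capacity_bytes


for name, (memory, secondary) in NODES.items():
    print(
        f"{name}: model is {shortfall(MODEL_BYTES, memory):.0f}x the memory, "
        f"{shortfall(MODEL_BYTES, secondary):.0f}x the secondary memory"
    )
```

Under these assumptions, the parameters alone are 112 times larger than the memory of ReSE2NSE v2 and 14 times larger than its secondary memory, before counting activations or program code.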

Apart from the above constraints, WSNs running AI are deployed in environments that may change over time and may differ from the environment in which the AI was trained. This problem is known as concept drift. AI algorithms running on WSNs need to keep working as the underlying patterns change, using techniques such as online learning.
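As an illustration of why online learning helps under concept drift, the sketch below compares a model frozen after initial training with one that keeps updating on every sample. Everything here is an assumption made for the example: the synthetic 1-D data stream, the abrupt label flip that stands in for drift, the logistic model, and the names `OnlineLogistic` and `run`. It is not a method taken from the cited works.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class OnlineLogistic:
    """1-D logistic regression updated one sample at a time with SGD."""

    def __init__(self, lr=0.5):
        self.w = 0.0
        self.b = 0.0
        self.lr = lr

    def predict(self, x):
        return 1 if sigmoid(self.w * x + self.b) >= 0.5 else 0

    def update(self, x, y):
        # Gradient of the log loss with respect to the pre-activation.
        err = sigmoid(self.w * x + self.b) - y
        self.w -= self.lr * err * x
        self.b -= self.lr * err


def run(seed=0, n_per_phase=500, tail=200):
    """Return (frozen_accuracy, online_accuracy) over the last `tail`
    samples seen after the concept has drifted."""
    rng = random.Random(seed)
    frozen, online = OnlineLogistic(), OnlineLogistic()

    # Phase A: both models learn the original concept (label = x > 0).
    for _ in range(n_per_phase):
        x = rng.uniform(-1, 1)
        y = 1 if x > 0 else 0
        frozen.update(x, y)
        online.update(x, y)

    # Phase B: the concept flips (label = x < 0). Only the online model
    # keeps learning; each sample is scored before it is used for training.
    hits_frozen = hits_online = 0
    for i in range(n_per_phase):
        x = rng.uniform(-1, 1)
        y = 1 if x < 0 else 0
        if i >= n_per_phase - tail:
            hits_frozen += frozen.predict(x) == y
            hits_online += online.predict(x) == y
        online.update(x, y)

    return hits_frozen / tail, hits_online / tail


frozen_acc, online_acc = run()
print(f"frozen model accuracy after drift: {frozen_acc:.2f}")
print(f"online model accuracy after drift: {online_acc:.2f}")
```

The per-sample update needs only the current weights and one sample in memory, which is the property that makes this style of learning attractive for memory-constrained nodes.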

Zhou '21 describes edge AI as using widespread edge resources to gain AI insight. This means not only running an AI algorithm on one node, but potentially running inference and training cooperatively across multiple nodes. The concept comes in several degrees: training and inference in the server, training in the server with inference in the node, and both training and inference in the node itself. For the highest scalability and lowest communication overhead, training and inference should ideally both take place in the nodes.

TinyML

The TinyML organization describes tinyML as the "field of machine learning technologies and applications including hardware, algorithms and software capable of performing on-device sensor data analytics at extremely low power, typically in the mW range and below, and hence enabling a variety of always-on use-cases and targeting battery operated devices".

MLPerf Tiny [3] is widely accepted as the common benchmark for TinyML work. It prescribes basic accuracy targets for four selected TinyML use cases, as shown in the table below.

| Use Case | Dataset | Specified Model | Quality Target |
|---|---|---|---|
| Visual Wake Words | MSCOCO | MobileNetV1 | 80% (top-1) |
| Keyword Spotting | Google Speech Commands | DS-CNN | 90% (top-1) |
| Image Classification | CIFAR-10 | ResNet | 85% (top-1) |
| Anomaly Detection | DCASE2020 | FC-Autoencoder | 85% area under curve (AUC) |

Concept Drift

A recent approach known as federated learning [4] was put forth to solve privacy issues when training deep neural network (DNN) models, but it can also be applied to concept drift in WSNs. Each node trains on its own data and sends only its local updates to the model parameters to a central host. The host aggregates these updates into a stronger model and then distributes the improved model back to the nodes.
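The aggregation step can be sketched as a sample-weighted average of the locally trained parameters, in the spirit of the federated averaging scheme in [4]. This is a simplified illustration under stated assumptions, not the reference implementation: every node participates in every round, local training uses full-batch gradient descent on a two-parameter linear model, and the function names (`local_update`, `fed_avg`, `train`) and the toy data are hypothetical.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of gradient descent on one node's private data.

    Model: y = w0 + w1 * x, loss: mean squared error.
    """
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        g0 = sum((w0 + w1 * x - y) for x, y in data) * 2 / n
        g1 = sum((w0 + w1 * x - y) * x for x, y in data) * 2 / n
        w0 -= lr * g0
        w1 -= lr * g1
    return [w0, w1]


def fed_avg(client_weights, client_sizes):
    """Average each parameter, weighting every node by its sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[k] * n for w, n in zip(client_weights, client_sizes)) / total
        for k in range(n_params)
    ]


def train(clients, rounds=300):
    """Alternate local training on the nodes with aggregation in the host."""
    global_w = [0.0, 0.0]
    for _ in range(rounds):
        # Only parameters leave the nodes; the raw samples stay local.
        updates = [local_update(global_w, data) for data in clients]
        global_w = fed_avg(updates, [len(data) for data in clients])
    return global_w


# Three hypothetical nodes, each observing a different slice of y = 1 + 2x.
clients = [
    [(0.0, 1.0), (0.2, 1.4)],
    [(0.4, 1.8), (0.6, 2.2), (0.8, 2.6)],
    [(1.0, 3.0)],
]

w0, w1 = train(clients)
print(f"global model: y = {w0:.3f} + {w1:.3f} * x")
```

Note that the third node holds a single sample and cannot identify both parameters on its own; the aggregated model still recovers the underlying line because the other nodes constrain the remaining direction.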

1. Alsheikh, Mohammad Abu, et al. "Machine learning in wireless sensor networks: Algorithms, strategies, and applications." IEEE Communications Surveys & Tutorials 16.4 (2014): 1996-2018.

2. Ma, Dong, et al. "Sensing, computing, and communications for energy harvesting IoTs: A survey." IEEE Communications Surveys & Tutorials 22.2 (2019): 1222-1250.

3. Banbury, Colby, et al. "MLPerf Tiny benchmark." arXiv preprint arXiv:2106.07597 (2021).

4. McMahan, Brendan, et al. "Communication-efficient learning of deep networks from decentralized data." Artificial Intelligence and Statistics. PMLR, 2017.