Distributed learning

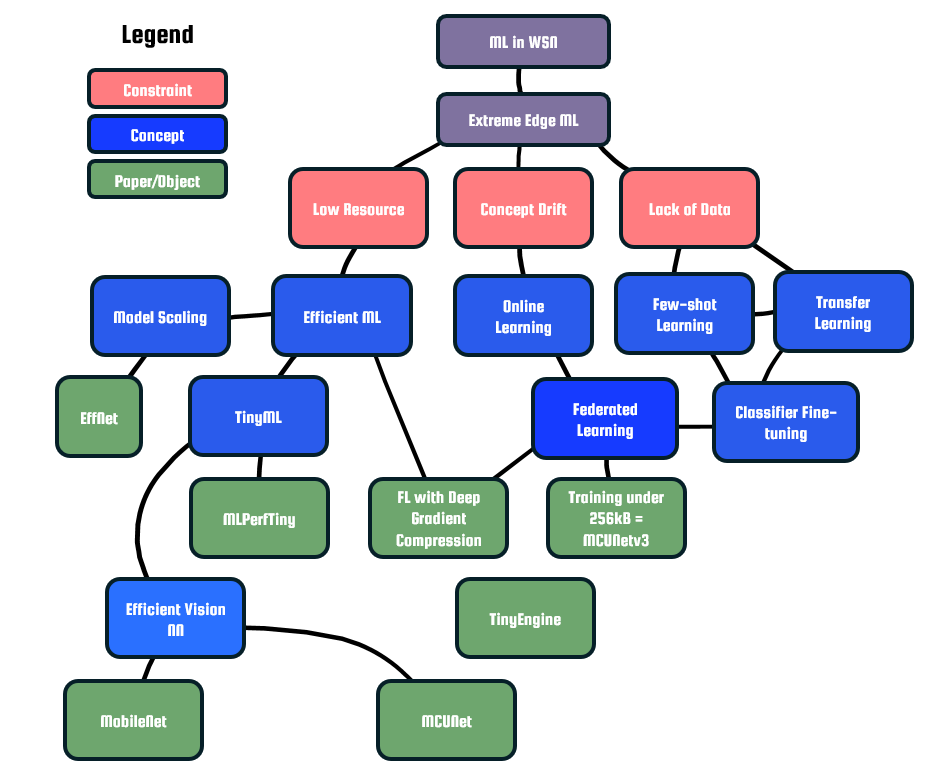

The use of artificial intelligence (AI) in extreme edge devices such as wireless sensor nodes (WSNs) would greatly benefit their scalability and application space. AI can be applied to solve clustering and data routing problems and, most importantly, can reduce the volume of data transmitted, either through data compression or by drawing conclusions from the data within the node itself [1].

At the moment, potential use cases for ML in WSN include, but are not limited to:

- General Pattern Classification (including recognizing speech commands, voice recognition for authentication, bio-signal processing for medical sensors)

- Image Processing (including presence detection a.k.a. visual wake words, car counting for traffic analytics)

- Node routing and scheduling to improve network scalability and lifespan (through the use of reinforcement learning (RL); see more in clustering).

However, since devices at the extreme edge are constrained to work with extremely low amounts of energy [2], even the simplest AI models are difficult to execute on typical sequential processors. Due to these energy constraints, WSNs have memories on the order of kB and clock speeds on the order of kHz to MHz, rendering them unable to run state-of-the-art AI applications.

| Reference WSN Project | Device | CLK | Memory | Secondary Memory | Processor |
|---|---|---|---|---|---|
| ReSE2NSE v1 | Digi XBee-Pro, TI MSP430F213 | 16 MHz | 512 B | 8 KB + 256 B | 16-bit MSP-430 |
| ReSE2NSE v2 | ATSAMR21 | <48 MHz | 32 KB | 256 KB | ARM32-Cortex M0 |

In contrast with the above specs, the TinyML recommended model for classifying CIFAR-10 has 3.5MB of parameters.
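As a rough back-of-the-envelope check of this gap, the sketch below compares the 3.5MB figure quoted above with the memories listed in the table. It assumes binary units (1 KB = 1024 bytes) and adds primary and secondary memory together, which is an optimistic simplification; the names `devices` and `shortfall_factor` are illustrative only.

```python
# Memory figures taken from the reference WSN table above, in bytes.
KB = 1024
MB = 1024 * KB

devices = {
    "ReSE2NSE v1": {"memory": 512, "secondary": 8 * KB + 256},
    "ReSE2NSE v2": {"memory": 32 * KB, "secondary": 256 * KB},
}

MODEL_BYTES = 3.5 * MB  # model size quoted in the text


def shortfall_factor(model_bytes, device):
    """Return how many times larger the model is than all memory on the device."""
    total = device["memory"] + device["secondary"]
    return model_bytes / total


for name, spec in devices.items():
    factor = shortfall_factor(MODEL_BYTES, spec)
    print(f"{name}: model is {factor:.1f}x the total on-board memory")
```

Even under this generous accounting, the model exceeds the larger node's memory by more than an order of magnitude.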

Apart from the above constraints, WSNs running AI are deployed in environments that may change over time and may differ from the environment in which the AI was trained. This problem is known as concept drift. AI algorithms running on WSNs need to keep working despite continuously changing patterns, using techniques such as online learning.

Zhou '21 describes edge AI as using widespread edge resources to gain AI insight. This means not only running an AI algorithm on one node, but potentially running inference and training cooperatively across multiple nodes. The concept comes in several degrees: training and inference both in the server, training in the server with inference in the node, and both training and inference in the node itself. For the highest scalability and lowest communication overhead, both training and inference should ideally take place in the nodes.

TinyML and accepted benchmarks for Edge AI

The TinyML organization describes tinyML as the "field of machine learning technologies and applications including hardware, algorithms and software capable of performing on-device sensor data analytics at extremely low power, typically in the mW range and below, and hence enabling a variety of always-on use-cases and targeting battery operated devices".

MLPerfTiny[3] is widely accepted as the common benchmarking requirement for TinyML works. It prescribes basic accuracy targets along with suggested models (if benchmarking hardware) for 4 selected basic use cases for TinyML as shown in the table below.

| Use Case | Dataset | Suggested Model | Quality Target |
|---|---|---|---|
| Visual Wakewords | MSCOCO | MobileNetv1 | 80% (top-1) |
| Keyword Spotting | Google Speech Commands | DS-CNN | 90% (top-1) |
| Image Classification | CIFAR-10 | ResNet | 85% (top-1) |
| Anomaly Detection | DCASE2020 | FC-Autoencoder | 85% Area under Curve |

Visual Wakewords is a classification task of telling whether or not a person is in a picture. This covers similar tasks where complex high-resolution data is provided but the conclusions required are simple.

Anomaly detection is a similar task. It covers cases where both the conclusions required and the input data are likely to be simple, but the stakes are slightly higher, as both false positives and false negatives must be minimized.
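Since the anomaly detection target in the table above is expressed as area under the curve (AUC) rather than top-1 accuracy, a minimal sketch of how such a score can be computed may help. It uses the rank interpretation of ROC AUC (the probability that a randomly chosen anomalous sample receives a higher anomaly score than a randomly chosen normal one). The function name and the toy scores are made up for illustration and are not part of the benchmark tooling.

```python
def roc_auc(scores, labels):
    """ROC AUC as P(anomaly score > normal score); ties count as half a win."""
    anomalous = [s for s, y in zip(scores, labels) if y == 1]
    normal = [s for s, y in zip(scores, labels) if y == 0]
    if not anomalous or not normal:
        raise ValueError("need at least one anomalous and one normal sample")
    wins = 0.0
    for a in anomalous:
        for n in normal:
            if a > n:
                wins += 1.0
            elif a == n:
                wins += 0.5
    return wins / (len(anomalous) * len(normal))


# Toy anomaly scores: label 1 marks an anomalous sample, 0 a normal one.
scores = [0.1, 0.4, 0.35, 0.8]
labels = [0, 0, 1, 1]

auc = roc_auc(scores, labels)
meets_target = auc >= 0.85  # quality target from the table above
print(f"AUC = {auc:.2f}, meets target: {meets_target}")
```

Because AUC is computed over all score thresholds, it penalizes both missed anomalies and false alarms, which matches the requirement that both error types be kept low.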

Efficient Software Models

State of the Art: Efficient Vision Models

Traditional ML techniques struggle most with high-dimension image classification tasks. This is the main task for which heavily parametrized resource-heavy neural networks are used. Additionally, similar architectures are applied to reinforcement learning (such as the one for AlphaZero)[4], and so similar efficient architectures are needed for running RL clustering models.

Shown below is a table summarizing the most efficient known vision models.

| Notes | Architecture | Applied to | Accuracy | Parameters | #FLOPs |
|---|---|---|---|---|---|
| Deep Residual Learning | ResNet-18 | CIFAR-10 | 85% (Wan et al.) | 11M | 1800M |
| Deep Residual Learning | ResNet-18 | CIFAR-100 | 82.3% (SAMix Augmented Data) | 11M | 1800M |
| Deep Residual Learning | ResNet-18 | ImageNet | 72.33%/91.8% (SAMix Augmented Data) | 11M | 1800M |
| MobileNet | MobileNetv2 | ImageNet | 72% | 3.5M | 300M |
| Baseline Effnet | EfficientNetB0 | CIFAR-10 | 93.52% (Main Paper) (Transfer Learning) | 5.3M | 390M |
| Baseline Effnet | EfficientNetB0 | ImageNet | 77.1%/93.3% (Main Paper) | 5.3M | 390M |
| Grouped Pointwise Convolutions | kEffNet-B0 16ch | CIFAR-10/100 | 92.24%/71.92% | 0.64M | 129M |
| Grouped Pointwise Convolutions | kMobileNet Large 16ch | CIFAR-10/100 | 92.74%/71.36% | 0.40M | 81M |
| Patch-based Inference | MCUNetv2M4 | ImageNet | 64.90% | 0.47M | 119M |
| Patch-based Inference | MCUNetv2H7 | ImageNet | 71.80% | 0.67M | 256M |

Principles of Efficient Inference

Convolutional neural networks (CNNs) are widely used for any task with data that come with spatial relations, such as images or time-based data.

Empirically, deeper networks (with more layers) are known to perform better on more complicated tasks [5]. However, depth had a practical limit: beyond a certain number of layers, training was no longer feasible. Models such as ResNet, built from sets of layers bypassed by skip connections (known as residual blocks), were introduced to solve this problem, allowing for extremely deep networks [6].
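To make the idea of a skip connection concrete, here is a toy sketch of a residual block computing y = ReLU(F(x) + x). It assumes small dense layers on plain Python lists in place of the convolutions used in ResNet, and the helper names are illustrative only.

```python
def relu(v):
    """Element-wise rectified linear unit."""
    return [max(0.0, x) for x in v]


def linear(v, weights):
    """Multiply vector v by a weight matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def residual_block(v, w1, w2):
    """y = relu(F(x) + x), where F(x) = W2 * relu(W1 * x)."""
    f = linear(relu(linear(v, w1)), w2)
    # The skip connection adds the block input back onto the block output.
    return relu([a + b for a, b in zip(f, v)])


zeros = [[0.0, 0.0], [0.0, 0.0]]
x = [1.0, 2.0]
print(residual_block(x, zeros, zeros))  # the block passes x through unchanged
```

With all-zero weights the block reduces to the identity for non-negative inputs, which gives some intuition for why stacking many such blocks does not make a network harder to train than a shallower one.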

Improving upon that, it was found that residual blocks which change the number of channels inside the block (bottleneck residuals, which reduce and then expand it, or the inverted residuals of MobileNetv2, which expand and then reduce it) allow networks to keep good performance with a much lower parameter count and number of operations [7].

EfficientNet was developed when researchers attempted to analyze how performance changes in the former networks as you vary parameters of the architecture, like the number of channels or the network depth. They found both ways to improve accuracy by scaling the network up and also ways to improve efficiency by scaling the network down in a way that preserves accuracy.[8]
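The scaling rule from [8] can be sketched numerically. The paper scales depth, width, and input resolution together using a single compound coefficient φ, with base multipliers found by grid search on the B0 baseline (α = 1.2 for depth, β = 1.1 for width, γ = 1.15 for resolution). The function below is only an illustration of that arithmetic; its name and return layout are assumptions, not code from the paper.

```python
# Base multipliers reported for the EfficientNet-B0 grid search in [8].
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution


def compound_scale(phi):
    """Return (depth, width, resolution, flops) multipliers for coefficient phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    # FLOPs grow linearly with depth and quadratically with width and resolution.
    flops = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return depth, width, resolution, flops


for phi in range(4):
    d, w, r, f = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}, FLOPs x{f:.2f}")
```

Because α·β²·γ² is close to 2, each unit increase in φ roughly doubles the FLOPs, and a negative φ would correspondingly shrink the network.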

Recent works (kEffNet, kMobileNet) have found that applying older principles such as grouped convolutions and pointwise convolutions to recent efficient networks further reduces the required parameters and floating-point operations [9].
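The parameter savings from pointwise and grouped convolutions follow directly from the weight-count formula of a convolution layer. The sketch below counts weights only (biases ignored); the channel count of 256 and the group count of 16 are arbitrary illustrative choices, not the configurations used in [9].

```python
def conv_params(c_in, c_out, kernel=1, groups=1):
    """Number of weights in a 2D convolution, ignoring biases.

    Each output channel only sees c_in / groups input channels.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return (c_in // groups) * c_out * kernel * kernel


channels = 256
standard_3x3 = conv_params(channels, channels, kernel=3)
pointwise = conv_params(channels, channels, kernel=1)
grouped_pointwise = conv_params(channels, channels, kernel=1, groups=16)

print(f"standard 3x3:      {standard_3x3}")
print(f"pointwise 1x1:     {pointwise}")
print(f"grouped pointwise: {grouped_pointwise}")
```

In this toy setting, moving from a pointwise convolution to a grouped one divides the weight count by the number of groups, at the cost of each output channel seeing fewer input channels.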

Apart from those, neural architecture search (NAS) is an approach where heuristic algorithms are used to find accurate models under parameter count constraints (ProxylessNAS, MCUNet). It works by optimizing the search space and by efficiently estimating, before training, the probability that a candidate model reaches high accuracy on a specific task [10][11].

Running efficient software in small hardware

State of the Art: Models demonstrated in microcontrollers

Microcontrollers can be used for IoT and also as wireless sensors. Works in the TinyML field tend to be demonstrated on resource-constrained microcontrollers; the best of these are summarized here.

The table below summarizes the best demonstrations submitted to MLCommons: Tiny Inference. The most efficient by far is a digital accelerator from CERN, submitted to the open division, which used custom models (not the prescribed ones) and high-level synthesis (HLS) on an FPGA [12].

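| Application | Accuracy/AUC/Dist | Inference Latency | Energy Per Inference | Software Stack | Device | CLK | Memory + Secondary | Processor | Voltage |
|---|---|---|---|---|---|---|---|---|---|
| VWW | 80% | 151.63ms | 4.03mJ | X-Cube-AI v.7.1.0 | Nucleo-U575ZI-Q | 160 MHz | 768k + 2M | ARM32CM33 | 1.8V / SMPS |
| CF10 | 85% | 158.13ms | 4.15mJ | X-Cube-AI v.7.1.0 | Nucleo-U575ZI-Q | 160 MHz | 768k + 2M | ARM32CM33 | 1.8V / SMPS |
| Speech Commands | 90% | 54.81ms | 1.48mJ | X-Cube-AI v.7.1.0 | Nucleo-U575ZI-Q | 160 MHz | 768k + 2M | ARM32CM33 | 1.8V / SMPS |
| ToyADMOS Car | 85% | 5.73ms | 0.152mJ | X-Cube-AI v.7.1.0 | Nucleo-U575ZI-Q | 160 MHz | 768k + 2M | ARM32CM33 | 1.8V / SMPS |
| VWW | 80% | 186ms | 1.721mJ | Silicon Labs Gecko SDK/ TFLite Micro | Silicon Labs xG24-DK2601B | 78 MHz | 256k + 1.5M | ARM32CM33 w/ FPU + DSP + TrustZone | 1.8V |
| CF10 | 85% | 240ms | 2.248mJ | Silicon Labs Gecko SDK/ TFLite Micro | Silicon Labs xG24-DK2601B | 78 MHz | 256k + 1.5M | ARM32CM33 w/ FPU + DSP + TrustZone | 1.8V |
| Speech Commands | 90% | 63.1ms | 0.611mJ | Silicon Labs Gecko SDK/ TFLite Micro | Silicon Labs xG24-DK2601B | 78 MHz | 256k + 1.5M | ARM32CM33 w/ FPU + DSP + TrustZone | 1.8V |
| ToyADMOS Car | 85% | 5.41ms | 0.045mJ | Silicon Labs Gecko SDK/ TFLite Micro | Silicon Labs xG24-DK2601B | 78 MHz | 256k + 1.5M | ARM32CM33 w/ FPU + DSP + TrustZone | 1.8V |
| CF10 | 84.5% | 1.5ms | 2.535mJ | FINN | Xilinx PynqZ2 | 100 MHz / 650 MHz | + 128M | Dual Core ARM, Cortex-A9 MPCore | ? |
| Speech Commands | 82.5% | 0.033ms | 0.0537mJ | FINN | Xilinx PynqZ2 | 100 MHz / 650 MHz | + 128M | Dual Core ARM, Cortex-A9 MPCore | ? |
| ToyADMOS Car | 83% | 0.019ms | 0.03mJ | hls4ml | Xilinx PynqZ2 | 100 MHz / 650 MHz | + 128M | Dual Core ARM, Cortex-A9 MPCore | ? |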
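To relate these figures to the "mW range" in the tinyML definition, the energy and latency columns can be combined into an average power during inference. The sketch below does this for two rows of the table; it is a derived estimate under the assumption that the reported energy is spent evenly over the reported latency, and the function name is illustrative.

```python
def average_power_mw(energy_mj, latency_ms):
    """Average power in mW. mJ / ms equals W, so scale by 1000."""
    return energy_mj / latency_ms * 1000.0


# (energy in mJ, latency in ms) for the VWW rows of the table above.
rows = {
    "Nucleo-U575ZI-Q, VWW": (4.03, 151.63),
    "xG24-DK2601B, VWW": (1.721, 186.0),
}

for name, (energy, latency) in rows.items():
    print(f"{name}: about {average_power_mw(energy, latency):.1f} mW during inference")
```

Both microcontroller entries land in the tens of milliwatts or below while actively running inference, so duty cycling would be needed to bring the long-term average down further.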

In addition to the above, MIT's Han Lab has demonstrated many MLPerfTiny-passing works on very small microcontrollers, as tabulated below.

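| Work | Model | Quantization | Dataset | Accuracy (%) | Latency | Software Stack | Device | CLK | Memory + Secondary | Processor | Voltage |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MCUNet1 | MCUNet | INT4 | ImageNet | 62 | | TinyEngine | STM32F412 | 100 MHz | 256kB + 1M | ARM32CM4 | 1.7V-3.6V |
| MCUNet1 | MCUNet | INT4 | ImageNet | 63.5 | | TinyEngine | STM32F746 | 216 MHz | 320k + 1M | ARM32CM7 | 1.7V-3.6V |
| MCUNet1 | MCUNet | INT4 | ImageNet | 65.9 | | TinyEngine | STM32F765 | 216 MHz | 512k + 1M | ARM32CM7 | 1.7V-3.6V |
| MCUNet1 | MCUNet | INT4 | ImageNet | 70.7 | | TinyEngine | STM32H743 | 480 MHz | 512k + 1M | ARM32CM7 | 1.7V-3.6V |
| MCUNet1 | MCUNet | INT8 | VWW | 92 | 880ms | TinyEngine | STM32F746 | 216 MHz | 320k + 1M | ARM32CM7 | 1.7V-3.6V |
| MCUNet1 | MCUNet | INT8 | VWW | 88.7 | 200ms | TinyEngine | STM32F746 | 216 MHz | 320k + 1M | ARM32CM7 | 1.7V-3.6V |
| MCUNet1 | MCUNet | INT8 | VWW | 87 | 100ms | TinyEngine | STM32F746 | 216 MHz | 320k + 1M | ARM32CM7 | 1.7V-3.6V |
| MCUNet1 | MCUNet | INT8 | Speech Commands | 96 | 1105ms | TinyEngine | STM32F746 | 216 MHz | 320k + 1M | ARM32CM7 | 1.7V-3.6V |
| MCUNet2 | MCUNet2 | INT8 | ImageNet | 71.8 | | | STM32H743 | 480 MHz | 512k + 2M | ARM32CM7 | 1.7V-3.6V |
| MCUNet2 | MCUNet2 | INT8 | ImageNet | 64.9 | | | STM32F412 | 100 MHz | 256kB + 1M | ARM32CM4 | 1.7V-3.6V |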

Online learning & Learning with little data

As mentioned before, the AI model running on the WSN needs to be able to adapt to long-term changes in the sensing environment. To do this, one has to update the AI model based on new inputs. This puts special constraints on model requirements:

- The AI must be able to do model updates in an unsupervised fashion, as it is infeasible to have labelled data on the edge.

- The AI and online learning algorithm pair must not overfit or erroneously update on misclassified data.

- As training is known to be much more resource heavy than inference, the training algorithm must be efficient.

Additionally, a major requirement of AI algorithms is the availability of large datasets (labeled or unlabeled, depending on the application). For novel applications, such as kinetic energy harvesting patterns for microelectromechanical (MEMS) harvesting nodes, the datasets may not yet exist. Deploying nodes to gather data beforehand carries a high work overhead, and it is hard to create effective AI that generalizes from only loosely related public datasets.

Transfer Learning & Classifier Fine Tuning

By pre-training a heavy feature extractor and a much lighter classifier on a general but difficult task (ImageNet 1000-class classification for vision networks, for example) and then fine-tuning the pre-trained network on an easier task, the network can be made to converge on the easier task much faster and with less data.

This scheme can be made to use far fewer resources by freezing (not training) the parameters of earlier layers (or the feature extractor as a whole, if not using a DNN), as later layers are empirically found to contribute much more to accuracy. This scheme is demonstrated in Han Lab's MCUNetv3 [13]. By analyzing the marginal accuracy gains from freezing different parameters, they found heuristics that train models to near-original performance under tight resource constraints (under 256kB memory).
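A rough sketch of why freezing saves memory is given below. It assumes a deliberately simplified cost model in which training needs one stored gradient value per trainable parameter on top of the weights themselves, and it ignores activations and optimizer state. The layer names and sizes are hypothetical, and the "train only the last layers" rule is a simpler stand-in for the selection heuristics of [13]. In PyTorch, freezing a parameter would typically correspond to setting its `requires_grad` attribute to `False`.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    params: int
    trainable: bool = True


def freeze_all_but_last(layers, n_trainable):
    """Return a copy of the layers with only the last n_trainable left trainable."""
    cutoff = len(layers) - n_trainable
    return [Layer(l.name, l.params, i >= cutoff) for i, l in enumerate(layers)]


def training_bytes(layers, bytes_per_value=4):
    """Weights for every layer plus one gradient value per trainable parameter."""
    weights = sum(l.params for l in layers)
    grads = sum(l.params for l in layers if l.trainable)
    return (weights + grads) * bytes_per_value


# Hypothetical network: a feature extractor followed by a small classifier.
network = [
    Layer("conv1", 2_000),
    Layer("conv2", 20_000),
    Layer("conv3", 40_000),
    Layer("classifier", 1_000),
]

full = training_bytes(network)
frozen = training_bytes(freeze_all_but_last(network, n_trainable=1))
print(f"full training: {full} B, classifier-only fine-tuning: {frozen} B")
```

Under these assumptions, fine-tuning only the classifier needs roughly half the memory of full training, since gradients are stored for a small fraction of the parameters.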

Federated Learning

A recent approach known as federated learning [14] was put forth to solve privacy issues when training deep neural network (DNN) models, but it can also be applied to distributed learning across WSN nodes. Each node sends its local updates to the model parameters to a central host, which aggregates them into a more powerful trained model and then distributes that model back to the nodes.
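The aggregation step can be sketched as a weighted average of the node parameters, where each node is weighted by the number of local samples it trained on, as in the federated averaging scheme of [14]. The version below works on flat Python lists for simplicity; the function name and the toy values are illustrative assumptions.

```python
def federated_average(client_weights, client_sizes):
    """Average parameter vectors, weighting each client by its sample count."""
    if len(client_weights) != len(client_sizes):
        raise ValueError("one sample count is needed per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two nodes holding the same two-parameter model, trained on 1 and 3 samples.
node_weights = [[1.0, 2.0], [3.0, 4.0]]
node_sizes = [1, 3]
global_weights = federated_average(node_weights, node_sizes)
print(global_weights)
```

Weighting by sample count keeps a node with very little data from pulling the global model as strongly as a node with much more.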

Simulation Methodologies

To confirm the applicability of the principles of efficient inference, learning, and distributed processing, we can run simulations and measure the memory footprint, communication overhead, and number of multiply-add operations needed. Of the available ML frameworks, we use PyTorch, as it is the framework used at UCL.

To run a federated learning (distributed processing) simulation, one can do the following:

- Host multiple PyTorch models

- Give separate data batches to each model

- Send the gradients from those models to a global model (optionally, with a selection methodology)

- Aggregate the gradients from each model (using some aggregation methodology) to train the global model

For comparison, one can run a baseline model trained alone on all batches. The metrics to analyze are:

- Loss function convergence speed

- Final accuracy

- Total communication overhead (in MB)
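The simulation steps and metrics above can be sketched end to end on a toy problem. To stay self-contained, the sketch uses plain Python instead of PyTorch, a one-parameter model y = w·x in place of a neural network, plain averaging as the aggregation methodology, and an assumed 4 bytes per transmitted value. All names and constants are illustrative assumptions.

```python
def mse(w, data):
    """Mean squared error of the model y = w * x on (x, y) pairs."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def mse_gradient(w, batch):
    """Gradient of the mean squared error with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def simulate(batches, eval_data, rounds=50, lr=0.5, bytes_per_value=4):
    """Train one global weight from per-node gradients; track loss and traffic."""
    w = 0.0
    comm_bytes = 0
    losses = []
    for _ in range(rounds):
        grads = [mse_gradient(w, b) for b in batches]  # local computation per node
        comm_bytes += len(grads) * bytes_per_value      # uplink: one gradient per node
        w -= lr * sum(grads) / len(grads)               # aggregate by plain averaging
        comm_bytes += len(batches) * bytes_per_value    # downlink: updated weight
        losses.append(mse(w, eval_data))
    return w, losses, comm_bytes


# Synthetic data from y = 3x, split into four equally sized node batches.
xs = [-1.0 + 0.05 * i for i in range(40)]
data = [(x, 3.0 * x) for x in xs]
batches = [data[i::4] for i in range(4)]

w_fed, fed_losses, fed_bytes = simulate(batches, data)
w_base, base_losses, _ = simulate([data], data)  # baseline: one model, all data

print(f"federated w = {w_fed:.4f}, baseline w = {w_base:.4f}")
print(f"final loss: federated {fed_losses[-1]:.2e}, baseline {base_losses[-1]:.2e}")
print(f"communication overhead: {fed_bytes / 1e6:.6f} MB")
```

With equally sized batches and plain averaging, the aggregated gradient matches the full-data gradient, so the two runs converge almost identically here; differences in convergence speed and accuracy would only appear with unequal or non-identically distributed batches, node selection, or several local steps per round.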

References

- [1] Alsheikh, Mohammad Abu, et al. "Machine learning in wireless sensor networks: Algorithms, strategies, and applications." IEEE Communications Surveys & Tutorials 16.4 (2014): 1996-2018.

- [2] Ma, Dong, et al. "Sensing, computing, and communications for energy harvesting IoTs: A survey." IEEE Communications Surveys & Tutorials 22.2 (2019): 1222-1250.

- [3] Banbury, Colby, et al. "MLPerf Tiny benchmark." arXiv preprint arXiv:2106.07597 (2021).

- [4] Silver, David, et al. "A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play." Science 362.6419 (2018): 1140-1144.

- [5] He, Kaiming, et al. "Deep residual learning for image recognition." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

- [6] He, Kaiming, et al. "Deep residual learning for image recognition." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

- [7] Sandler, Mark, et al. "MobileNetV2: Inverted residuals and linear bottlenecks." Proceedings of the IEEE conference on computer vision and pattern recognition. 2018.

- [8] Tan, Mingxing, and Quoc Le. "EfficientNet: Rethinking model scaling for convolutional neural networks." International conference on machine learning. PMLR, 2019.

- [9] Schuler, Joao Paulo Schwarz, et al. "Grouped Pointwise Convolutions Reduce Parameters in Convolutional Neural Networks." MENDEL. Vol. 28. No. 1. 2022.

- [10] Lin, Ji, et al. "MCUNet: Tiny deep learning on IoT devices." Advances in Neural Information Processing Systems 33 (2020): 11711-11722.

- [11] Cai, Han, Ligeng Zhu, and Song Han. "ProxylessNAS: Direct neural architecture search on target task and hardware." arXiv preprint arXiv:1812.00332 (2018).

- [12] Borras, Hendrik, et al. "Open-source FPGA-ML codesign for the MLPerf Tiny Benchmark." arXiv preprint arXiv:2206.11791 (2022).

- [13] Lin, Ji, et al. "On-Device Training Under 256KB Memory." arXiv preprint arXiv:2206.15472 (2022).

- [14] McMahan, Brendan, et al. "Communication-efficient learning of deep networks from decentralized data." Artificial intelligence and statistics. PMLR, 2017.